One thing I get asked about, more than I ever expected, is how I do some things for CodeReviewVideos.com.

I never expected people to be interested in my set up.

But I get a fair amount of questions, all the same.

One such question has been around my editing process.

I keep things super simple. You may have noticed my lack of Hollywood effects on transitions, and what have you.

Growth

I take a lot of pleasure in recording the videos for the site.

It is truly the best bit.

I started this site because I thought about how much further along I’d have been, professionally and personally, if I had just known some of this stuff a few years earlier.

If I had followed this stuff, I could have been building systems that emphasised a more decoupled nature, and in doing so, I’d have built systems that were extremely pleasant to work with. The code would be an enjoyable place to be.

Which ultimately would give you more freedom to spend time working on the more exciting bits.

Learning React if you’re a back end dev may seem like a pointless pain in the arse. But it could be that your life is so overwhelmed with firefighting on the back end that you never get the time you’d like to play with the front end.

It may be that once you’ve played around with some simple, yet powerful front end concepts, you’d actually start to love it. There is a lot of fun to be had there.

Behind The Scenes

That’s the stuff I like recording.

But a huge constraint on recording more videos like that has been that an equal amount of time, or more, goes into editing.

This week I decided to take on a dedicated editor.

It’s a big deal for me. I’ve accepted that I can’t do it all.

And because I get asked about my behind-the-scenes process so much, I decided to share some of it. More on that below.

Video Update

This week I recorded two full days of Livestream stuff.

Livestream right now has literally been me recording as normal, but watching myself on a private Discord to see just how many secrets I’d accidentally expose. No plot twist here: it would have been a few.

The exercise was very worthwhile. I found out about a little box I could use to control various aspects of the stream like an arcade keypad. Seemed totally overkill.

More realistic is to go with some sort of two screen setup, and record the secondary screen, only dragging into shot what I know is safe to share.

Or an alternative is to go full open infrastructure, and treat it as a total demo environment. I’m not sure, yet. These are some of the enjoyable questions I find myself pondering on the livestream.

It is… unique.

It’s awesome fun though.

https://codereviewvideos.com/course/everyday-linux/video/reverse-incremental-search

Some stuff I take for granted is like wizardry to others.

When I first saw a systems architect use this command whilst debugging some prod issue, I vowed to find out what the command was.

I should have just asked. But at the time, it wasn’t the right environment. And later on I couldn’t articulate what I’d seen.

So now I share this with you. Everyone new who sees this thinks it’s cool. Well, everyone who thinks things like this are cool.

People like you and me 🙂

https://codereviewvideos.com/course/live-stream-broken-link-checker/video/decouple-wizard-from-oz

In the second Livestream video we dive into refactoring to a tested implementation. The design takes on a totally different shape.

It’s really interesting where this leads us, too, in terms of a coupled or, ideally, more decoupled system.

With the decoupling should come flexibility, and enjoyability.

This one is for you if you’ve ever wondered what it’s like to work with PHPUnit beyond a trivial example.

I’d be keen to read your thoughts as you watch along. Join me on the forum to do so.

https://codereviewvideos.com/course/meta/video/editing-process

This video is one I would have cringed at myself for just a few years back. Now? Life’s too short.

I get asked about my process a lot. Way more than I anticipated. It will be nice to have a video reference to direct those questions towards in the future.

This is also serving as my “on boarding” video for my new video editor.

A video editing video on video editing for a video editor, so they can help me with my video editing.

If I had a moustache, the ends would be receiving a serious amount of whisker curling after such a thing.

Thank You

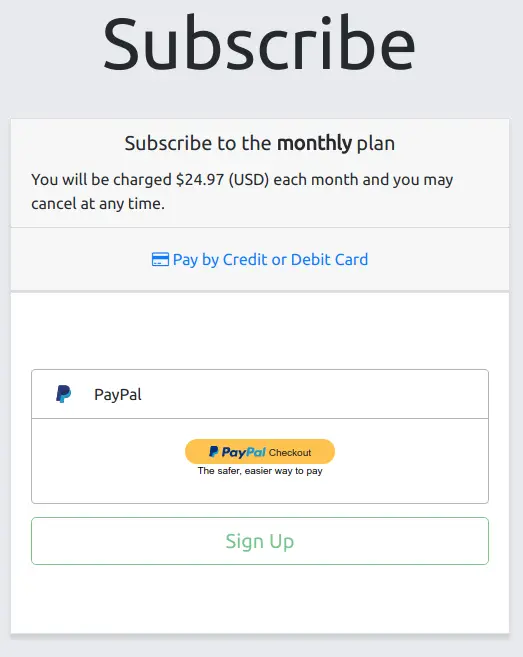

This site is possible thanks to your continued support, for which I am extremely grateful.

If you are an existing subscriber, may I take this opportunity to say a sincerely heartfelt thank you.

If you are not yet a subscriber then you’re missing out on a developer education from someone who genuinely and passionately wants you to succeed in everything you do.

It’s good stuff and you should check it out.

And don’t just take my word for it:

A great tutorials that provide real day to day solutions and techniques to every Symfony and PHP developer.

– amdouni

Until next week,

Chris
