OK, hopefully this one is as easy to fix for you as it was for me. Easy being the typical (seemingly?) undocumented “one liner” that makes everything work.

In my example, I had just set up WordPress with ACF, added in ACF to REST API, and JWT Authentication for WP-API.

Essentially that gives a combo of admin UI to create and manage the custom fields, and then a REST API endpoint to GET, PUT, POST, etc.

The docs for getting the JWT Authentication plugin set up are good enough. Just a couple of additional define statements required in wp-config.php. Hopefully you have that sorted, and can log in:

curl -X POST \

https://example.com/wp-json/jwt-auth/v1/token \

-d '{

"username": "my_wp_user",

"password": "my_wp_users_password"

}'

// should return response, e.g.

{

"token": "aVeryLongStringHere",

"user_email": "admin@example.com",

"user_nicename": "my_wp_user",

"user_display_name": "Admin Person Name"

}Sweet. So far, so good.

Next, you need to add in at least one ACF custom field via your admin UI.

I’ve gone with a simple text field.

Even without authenticating, you should be able to hit your WP REST API and see the extra stuff added in by ACF:

curl -X GET \

https://example.com/wp-json/wp/v2/pages/9

{

"id": 9,

"date": "2020-06-17T18:41:01",

"date_gmt": "2020-06-17T17:41:01",

"guid": {

},

"modified": "2020-06-25T09:13:20",

"modified_gmt": "2020-06-25T09:15:44",

"slug": "my-post-slug",

"status": "publish",

"type": "page",

"link": "https://example.com/whatever/something",

"title": {

},

"content": {

},

"excerpt": {

},

"author": 1,

"featured_media": 0,

"parent": 8,

"menu_order": 0,

"comment_status": "closed",

"ping_status": "closed",

"template": "page-templates/my-page-template.php",

"meta": [],

"acf": {

"example_field": ""

},

"_links": {

}

}I’ve trimmed this right down. The interesting bit is the acf key / value. Make sure you see it.

The Problem

Things start to get interesting from here on in.

In order to update the post / page / whatever, we will need to be authenticated. We authenticated earlier, and got back a token. We need to pass that token as a Header on any requests that want to add / update data.

This is really easy – just add the header key of Authorization, and the value of Bearer your_token_from_earlier_goes_here. An example follows below.

But more importantly, as best I can tell, we need to use a special endpoint to update ACF fields.

For regular WP data, you might POST or PUT into e.g. https://example.com/wp-json/wp/v2/pages/9 to update data. Technically, PUT is more accurate when updating, and POST is for initial creation, but either seem to work.

curl -X POST \

https://example.com/wp-json/wp/v2/pages/9 \

-H 'authorization: Bearer aVeryLongStringHere' \

-d '{

"slug": "my-updated-slug"

}'

// which should return a full JSON response with the all the data, and the updated slug.But if you try to update ACF this way, it just silently ignores your change:

curl -X POST \

https://example.com/wp-json/wp/v2/pages/9 \

-H 'authorization: Bearer aVeryLongStringHere' \

-d '{

"acf": {

"example_field": "some interesting update"

}

}'

// it should 'work' - you get a 200 status code, and a full JSON response, but 'example_field' will not have updated.My intuition says that seeing as we followed the response structure, this should have worked. But clearly, it doesn’t.

But it turns out that we need to use a separate endpoint for ACF REST API updates:

curl -X GET \

https://example.com/wp-json/wp/v2/pages/9

// note again, no auth needed for this GET request

{

"acf": {

"example_field": ""

}

}So this works, too. This only returns the acf field subset. But still, confusingly, the top level key is acf. And that’s what threw me.

See, if we try to make a POST or PUT following this shape:

curl -X POST \

https://example.com/wp-json/wp/v2/pages/9 \

-H 'authorization: Bearer aVeryLongStringHere' \

-d '{

"acf": {

"example_field": "some interesting update"

}

}'

// fails, 500 error

{

"code": "cant_update_item",

"message": "Cannot update item",

"data": {

"status": 500

}

}And the same goes for if we drop the acf top level key:

curl -X POST \

https://example.com/wp-json/wp/v2/pages/9 \

-H 'authorization: Bearer aVeryLongStringHere' \

-d '{ example_field": "some interesting update" }'

// fails, 500 error

{

"code": "cant_update_item",

"message": "Cannot update item",

"data": {

"status": 500

}

}The Solution

As I said at the start, this is a one liner. And as far as I could see, it’s not *clearly* documented. Maybe it’s in there somewhere. Maybe if you use WP day in, day out, you already know this. I don’t. And so I didn’t.

Hopefully this saves you some time:

curl -X POST \

https://example.com/wp-json/wp/v2/pages/9 \

-H 'authorization: Bearer aVeryLongStringHere' \

-d '{

"fields": {

"example_field": "some interesting update"

}

}'

// works, 200, response:

{

"acf": {

"example_field": "some interesting update"

}

}And lo-and-behold, that works.

I think it’s super confusing that the field key is used on create / update, but the acf key is returned on read. Admittedly I have done this myself on API’s before – but as a consumer I now realise how unintuitive and frustrating that is.

I had a definitive answer to the first question:

I had a definitive answer to the first question: Video Updates

Video Updates

But of course, over the last few weeks I’ve had these other big site changes (dare I say, improvements) to make. And that has meant I haven’t been recording. Thankfully all that can change, and I can get back to making recording new stuff.

But of course, over the last few weeks I’ve had these other big site changes (dare I say, improvements) to make. And that has meant I haven’t been recording. Thankfully all that can change, and I can get back to making recording new stuff.

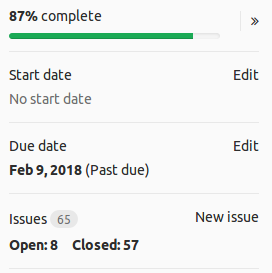

Behind the scenes over the past 10 weeks I have been working on integrating CodeReviewVideos with Braintree.

Behind the scenes over the past 10 weeks I have been working on integrating CodeReviewVideos with Braintree.