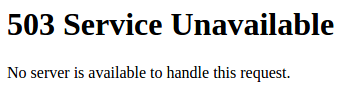

One annoyance I’ve hit whilst running ELK on Docker is that, after rebooting my system, the same error keeps coming back:

* Starting periodic command scheduler cron

...done.

* Starting Elasticsearch Server

...done.

waiting for Elasticsearch to be up (1/30)

waiting for Elasticsearch to be up (2/30)

waiting for Elasticsearch to be up (3/30)

waiting for Elasticsearch to be up (4/30)

waiting for Elasticsearch to be up (5/30)

waiting for Elasticsearch to be up (6/30)

waiting for Elasticsearch to be up (7/30)

waiting for Elasticsearch to be up (8/30)

waiting for Elasticsearch to be up (9/30)

waiting for Elasticsearch to be up (10/30)

waiting for Elasticsearch to be up (11/30)

waiting for Elasticsearch to be up (12/30)

waiting for Elasticsearch to be up (13/30)

waiting for Elasticsearch to be up (14/30)

waiting for Elasticsearch to be up (15/30)

waiting for Elasticsearch to be up (16/30)

waiting for Elasticsearch to be up (17/30)

waiting for Elasticsearch to be up (18/30)

waiting for Elasticsearch to be up (19/30)

waiting for Elasticsearch to be up (20/30)

waiting for Elasticsearch to be up (21/30)

waiting for Elasticsearch to be up (22/30)

waiting for Elasticsearch to be up (23/30)

waiting for Elasticsearch to be up (24/30)

waiting for Elasticsearch to be up (25/30)

waiting for Elasticsearch to be up (26/30)

waiting for Elasticsearch to be up (27/30)

waiting for Elasticsearch to be up (28/30)

waiting for Elasticsearch to be up (29/30)

waiting for Elasticsearch to be up (30/30)

Couln't start Elasticsearch. Exiting.

Elasticsearch log follows below.

[2017-07-14T08:36:42,337][INFO ][o.e.n.Node ] [] initializing ...

[2017-07-14T08:36:42,437][INFO ][o.e.e.NodeEnvironment ] [71cahpZ] using [1] data paths, mounts [[/var/lib/elasticsearch (/dev/sde2)]], net usable_space [51.1gb], net total_space [146.6gb], spins? [possibly], types [ext4]

[2017-07-14T08:36:42,438][INFO ][o.e.e.NodeEnvironment ] [71cahpZ] heap size [1.9gb], compressed ordinary object pointers [true]

[2017-07-14T08:36:42,463][INFO ][o.e.n.Node ] node name [71cahpZ] derived from node ID [71cahpZ4SjeKKAoH8X5dYg]; set [node.name] to override

[2017-07-14T08:36:42,463][INFO ][o.e.n.Node ] version[5.3.0], pid[64], build[3adb13b/2017-03-23T03:31:50.652Z], OS[Linux/4.4.0-45-generic/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_121/25.121-b13]

[2017-07-14T08:36:43,910][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [aggs-matrix-stats]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [ingest-common]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [lang-expression]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [lang-groovy]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [lang-mustache]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [lang-painless]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [percolator]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [reindex]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [transport-netty3]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] loaded module [transport-netty4]

[2017-07-14T08:36:43,911][INFO ][o.e.p.PluginsService ] [71cahpZ] no plugins loaded

[2017-07-14T08:36:45,703][INFO ][o.e.n.Node ] initialized

[2017-07-14T08:36:45,703][INFO ][o.e.n.Node ] [71cahpZ] starting ...

[2017-07-14T08:36:45,783][WARN ][i.n.u.i.MacAddressUtil ] Failed to find a usable hardware address from the network interfaces; using random bytes: 58:01:4e:51:11:f3:c9:da

[2017-07-14T08:36:45,835][INFO ][o.e.t.TransportService ] [71cahpZ] publish_address {172.20.0.3:9300}, bound_addresses {0.0.0.0:9300}

[2017-07-14T08:36:45,839][INFO ][o.e.b.BootstrapChecks ] [71cahpZ] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks

[2017-07-14T08:36:45,840][ERROR][o.e.b.Bootstrap ] [71cahpZ] node validation exception

bootstrap checks failed

max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

[2017-07-14T08:36:45,842][INFO ][o.e.n.Node ] [71cahpZ] stopping ...

[2017-07-14T08:36:45,909][INFO ][o.e.n.Node ] [71cahpZ] stopped

[2017-07-14T08:36:45,909][INFO ][o.e.n.Node ] [71cahpZ] closing ...

[2017-07-14T08:36:45,914][INFO ][o.e.n.Node ] [71cahpZ] closed

The core of the fix to this problem is helpfully included in the output:

max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

And on Ubuntu fixing this is a one-liner:

sudo sysctl -w vm.max_map_count=262144
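Before changing anything, it can be handy to confirm what the kernel currently reports. Here is a minimal sketch, assuming a Linux host where `/proc/sys/vm/max_map_count` is readable. The helper name `map_count_ok` is my own invention, not part of any tool:

```shell
#!/bin/sh
# Minimum value the Elasticsearch bootstrap check asks for (from the error above).
REQUIRED=262144

# Succeeds when the given value meets the minimum.
# map_count_ok is an illustrative helper name.
map_count_ok() {
    [ "$1" -ge "$REQUIRED" ]
}

# Read the live kernel value; fall back to 0 if the file is not readable.
current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)

if map_count_ok "$current"; then
    echo "vm.max_map_count is $current: OK"
else
    echo "vm.max_map_count is $current: too low, needs at least $REQUIRED"
fi
```

Running this straight after a reboot should show whether the setting has reverted to the default.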

Only, this is not persisted across a reboot.

To fix this permanently, I needed to do:

sudo vim /etc/sysctl.d/60-elasticsearch.conf

And add in the following line:

vm.max_map_count=262144
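If you would rather not open an editor, the same file can be written and loaded from the command line. This is a sketch assuming a reasonably recent Ubuntu, where `sysctl --system` re-reads the files under `/etc/sysctl.d/`. It needs root, so treat it as something to adapt rather than paste blindly:

```shell
# Write the setting to the same file as above, without opening an editor.
echo 'vm.max_map_count=262144' | sudo tee /etc/sysctl.d/60-elasticsearch.conf

# Reload all sysctl configuration files so the value applies without a reboot.
sudo sysctl --system

# Confirm the kernel now reports the new value.
sysctl vm.max_map_count
```

The last command should print `vm.max_map_count = 262144` if the file was picked up.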

I am not claiming credit for this fix. I found it here. I’m just sharing here because I know in future I will need to do this again, and it’s easiest if I know where to start looking 🙂

Another important step, if you are persisting data, is to ensure the folder holding that data is owned by 991:991 (UID:GID).
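A rough sketch of checking that ownership, assuming GNU `stat` (as shipped on Ubuntu). The path `/path/to/elk-data` is only a placeholder for whichever host folder you persist data in, and `owner_of` is an illustrative helper name:

```shell
#!/bin/sh
# Print "uid:gid" for a path (GNU stat syntax).
# owner_of is an illustrative helper name.
owner_of() {
    stat -c '%u:%g' "$1"
}

# Placeholder: replace with the host folder you persist data in.
DATA_DIR="${DATA_DIR:-/path/to/elk-data}"

# Only suggest a fix when the folder exists and has the wrong owner.
if [ -d "$DATA_DIR" ] && [ "$(owner_of "$DATA_DIR")" != "991:991" ]; then
    echo "Fix with: sudo chown -R 991:991 $DATA_DIR"
fi
```

The script only prints the `chown` command rather than running it, so you can check the path before changing ownership recursively.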