There’s a phrase here in the UK:

Ship-shape and Bristol fashion

Meaning that everything should be in excellent working order.

I’ve spent most of this week getting two of my “legacy” projects into a shape I can ship.

Ship-shape, if you will.

There’s a fine line with side-projects, in my experience, between doing everything right and getting things done.

Now, don’t get me wrong. If you cut corners, inevitably you will have to pay the price if the project is successful.

The reason I’m willing to cut a few corners on side-projects is that I only have a rough idea of whether a project will be successful or not.

Honestly, that is a whole different can of worms, and one whose lid I’d rather keep closed.

Just a few sentences ago I referred to my projects as “legacy”. These are very active projects, but up until very late last week, they had no tests.

I am unable to attribute this quote to anyone in particular, but I have heard it repeated by many:

Legacy code is any code without tests.

And I would have to agree. You just cannot be absolutely sure how code actually works unless it is tested.

Now, adding tests is good. I think the vast, vast majority will agree on this.

But what else can we add to make sure our code is – and importantly, remains – Ship-shape?

Security Advisories

I like the Roave team. They are good dudes. Also, they have a nice doggy logo.

I add their roave/security-advisories to every project I work with.

All you need to do is:

```shell
composer require roave/security-advisories:dev-master
```

And you’re using this package in your project.

So what does it do?

It stops you from accidentally installing any packages with known security vulnerabilities.

Very handy, and as it’s a one-liner, it’s a super simple, immediately beneficial addition to your project.
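The package itself ships no code. Its composer.json is essentially one long "conflict" list of package versions with known vulnerabilities, so Composer's dependency resolver refuses to pick any of them. Here is a rough Python sketch of that idea. The package name and version ranges are made up, and it assumes each advisory is a simple half-open range `[low, high)`; real Composer constraints are much richer than this.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]


def parse_version(text: str) -> Version:
    """Turn "2.8.1" (or "v2.8.1") into (2, 8, 1). Pre-release suffixes are not handled."""
    return tuple(int(part) for part in text.lstrip("v").split("."))


# Hypothetical advisory data: package name -> vulnerable [low, high) ranges.
CONFLICTS: Dict[str, List[Tuple[str, str]]] = {
    "acme/http-client": [("1.0.0", "1.4.2"), ("2.0.0", "2.1.5")],
}


def is_blocked(package: str, version: str) -> bool:
    """True if the version falls inside any known-vulnerable range."""
    v = parse_version(version)
    return any(
        parse_version(low) <= v < parse_version(high)
        for low, high in CONFLICTS.get(package, [])
    )


def installable(package: str, candidates: List[str]) -> List[str]:
    """The versions a resolver may still choose once conflicts are excluded."""
    return [c for c in candidates if not is_blocked(package, c)]


print(installable("acme/http-client", ["1.3.0", "1.4.2", "2.1.0", "2.1.5"]))
```

Only the patched releases survive: this prints `['1.4.2', '2.1.5']`.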

Symfony Security Check

Paranoid?

Not overly concerned about burning through a few extra CPU cycles?

Rather than just relying on the Roave team, I use a tool provided by the good folk at SensioLabs to double-check my work.

Built into Symfony is the Security Checker, and with two new entries in the scripts section of composer.json I can check my dependencies again after every install or update:

```json
"scripts": {
    "post-install-cmd": [
        "php bin/console security:check"
    ],
    "post-update-cmd": [
        "php bin/console security:check"
    ]
},
```

I’ve removed the other stuff for brevity.

What I hope to see is something like this:

```
php bin/console security:check

Symfony Security Check Report
=============================

 // Checked file: /path/to/my/project/composer.lock

 [OK] No packages have known vulnerabilities.

 ! [NOTE] This checker can only detect vulnerabilities that are referenced in the SensioLabs security advisories
 !        database. Execute this command regularly to check the newly discovered vulnerabilities.
```

Phew.
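As the NOTE in that output says, the checker compares the exact versions recorded in composer.lock against the SensioLabs security advisories database. A minimal Python sketch of that second pass, assuming a tiny hypothetical advisory list in place of the real database and the same simplified `[low, high)` ranges (all package names are made up):

```python
import json
from typing import Dict, List, Tuple

Version = Tuple[int, ...]

# Hypothetical stand-in for the advisories database: package -> [low, high) ranges.
ADVISORIES: Dict[str, List[Tuple[str, str]]] = {
    "acme/http-client": [("1.0.0", "1.4.2")],
}


def parse_version(text: str) -> Version:
    # composer.lock often records tags such as "v3.3.2", so drop the leading "v".
    return tuple(int(part) for part in text.lstrip("v").split("."))


def check_lock(lock_text: str) -> List[str]:
    """Return "name (version)" for each locked package with a known advisory."""
    lock = json.loads(lock_text)
    vulnerable = []
    for pkg in lock.get("packages", []) + lock.get("packages-dev", []):
        try:
            version = parse_version(pkg["version"])
        except ValueError:
            continue  # e.g. "dev-master": no numeric version to compare
        for low, high in ADVISORIES.get(pkg["name"], []):
            if parse_version(low) <= version < parse_version(high):
                vulnerable.append(f"{pkg['name']} ({pkg['version']})")
                break
    return vulnerable


sample_lock = json.dumps({
    "packages": [
        {"name": "acme/http-client", "version": "v1.3.0"},
        {"name": "acme/logger", "version": "2.0.0"},
    ],
    "packages-dev": [{"name": "acme/debug-toolbar", "version": "dev-master"}],
})
problems = check_lock(sample_lock)
print(problems or "[OK] No packages have known vulnerabilities.")
```

This prints `['acme/http-client (v1.3.0)']`. In a Composer script hook, turning a non-empty result into a non-zero exit code is what would make the install or update visibly fail.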

Static Analysis

I use a small number of static analysis tools.

I’d like to use Phan but I have not yet successfully managed to get a run to pass.

I use a PhpStorm plugin – Php Inspections (EA Extended) – to catch issues in real time as I code.

It offers a steady stream of advice while I work – a bit like having a super knowledgeable PHP guru watching over my shoulder, only without the coffee breath.

This is a good start, but I also make use of two other tools.

PHP Mess Detector (PHPMD) is a tool I’ve used for as long as I can remember. I use a very small subset of its rules, as I find it can be extremely noisy if I turn on too many checks.

My favourite part of PHPMD is the Cyclomatic Complexity test. With such a computer science textbook name, it might be one you overlook.

Cyclomatic Complexity counts the number of independent paths through a piece of code, so every extra if, loop, or boolean condition pushes the score up. A high score tells me that I need to rethink my implementation. Usually this involves breaking up nested if statements or for loops until things become much more maintainable. This is one of those tasks that takes extra time now, but for which you will thank yourself later.
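To make the metric less abstract, here is a rough Python approximation of the usual counting rule: start at 1 and add one for every decision point (an if, a loop, a conditional expression, an except clause, or each extra operand in an and/or chain). PHPMD applies its own rules to PHP code; this sketch only illustrates the idea, and the shipping_cost functions are made up for the example.

```python
import ast
from typing import Dict

# Node types that each add one decision point (one more path through the code).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def decision_points(node: ast.AST) -> int:
    points = 0
    for child in ast.walk(node):
        if isinstance(child, BRANCH_NODES):
            points += 1
        elif isinstance(child, ast.BoolOp):
            # "a and b and c" can short-circuit at two places.
            points += len(child.values) - 1
    return points


def complexity_per_function(source: str) -> Dict[str, int]:
    """Approximate McCabe complexity (1 + decision points) of each top-level function."""
    tree = ast.parse(source)
    return {
        node.name: 1 + decision_points(node)
        for node in tree.body
        if isinstance(node, ast.FunctionDef)
    }


NESTED = """
def shipping_cost(order):
    if order.express:
        if order.weight > 10:
            return 25
        return 15
    elif order.weight > 10 and not order.member:
        return 10
    return 5
"""

# The same rules, broken down into smaller functions.
SPLIT = """
def express_cost(order):
    return 25 if order.weight > 10 else 15

def standard_cost(order):
    if order.weight > 10 and not order.member:
        return 10
    return 5

def shipping_cost(order):
    return express_cost(order) if order.express else standard_cost(order)
"""

print(complexity_per_function(NESTED))  # {'shipping_cost': 5}
print(complexity_per_function(SPLIT))   # {'express_cost': 2, 'standard_cost': 3, 'shipping_cost': 2}
```

No single function in the split version scores above 3, even though the rules are unchanged. That is the kind of breaking down I mean.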

PHPStan was the tool I went with after I struggled to get Phan working.

Generally, PHPStan finds a bunch of errors and mistakes I’ve made that are not immediately obvious: passing too many arguments to a function, using invalid type hints, referencing missing constants… that sort of thing.

You might be thinking: how the heck do you miss a constant? And that’s a fair question. However, in the early stages of a project, when things are more fluxxy than Emmett Brown’s flux capacitor, these things do happen.
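Static analysis means finding these mistakes by reading the code rather than running it. PHPStan does this for PHP in far more depth. Purely to illustrate the idea (this is not how PHPStan works internally), here is a tiny Python checker built on the standard ast module that flags calls passing more positional arguments than a top-level function accepts. It deliberately ignores methods, keyword arguments, and functions that take *args; greet is a made-up example.

```python
import ast
from typing import List


def find_too_many_arguments(source: str) -> List[str]:
    tree = ast.parse(source)
    # How many positional parameters each top-level function accepts.
    # Functions taking *args accept any number, so they are left out.
    arity = {
        node.name: len(node.args.posonlyargs) + len(node.args.args)
        for node in tree.body
        if isinstance(node, ast.FunctionDef) and node.args.vararg is None
    }
    problems = []
    for node in ast.walk(tree):
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)):
            continue
        if any(isinstance(arg, ast.Starred) for arg in node.args):
            continue  # f(*values): argument count unknown without running the code
        expected = arity.get(node.func.id)
        given = len(node.args)
        if expected is not None and given > expected:
            problems.append(
                f"line {node.lineno}: {node.func.id}() takes {expected} "
                f"positional argument(s), {given} given"
            )
    return problems


SAMPLE = """
def greet(name):
    return "Hello, " + name

greet("peter", "extra")
greet("peter")
"""

print(find_too_many_arguments(SAMPLE))
```

This reports the call on line 5 of the sample without ever executing it.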

If you work in a team, having a few of these tools in place can ensure everyone is “singing from the same hymn sheet”. In other words, whilst everyone has their own style, the overall look and feel of the codebase should conform to a common set of guidelines.

PHPSpec

I use PHPSpec as a big part of my TDD workflow.

Typically I will write a Behat feature file first, as this gives me a high level overview of what needs to happen for this feature to be complete.

Once I have the failing feature file I can drill down into the individual scenario steps to make that feature pass.

Most often I work with JSON APIs, so the step definitions for my Behat scenarios are largely already written. By which I mean most scenarios look something like this:

```gherkin
Scenario: User can GET their personal data by their unique ID
  When I send a "GET" request to "/users/u1"
  Then the response code should be 200
  And the response header "Content-Type" should be equal to "application/json; charset=utf-8"
  And the response should contain json:
    """
    {
      "id": "u1",
      "email": "peter@test.com",
      "username": "peter"
    }
    """
```

The expected response (and the POST body, not shown here) are plain text arguments to the steps, so they can change without my having to write new step definitions.
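Because the expected JSON is just text in the feature file, one generic step can check it against any response. A Python sketch of such a step, assuming "contain" means a recursive subset match: every expected key must be present with an equal value, extra keys in the response are allowed, and lists must match exactly. Behat's own step definitions are PHP and may differ in the details; response_should_contain_json is a hypothetical name.

```python
import json
from typing import Any


def contains_json(actual: Any, expected: Any) -> bool:
    """True if every key/value in `expected` is present in `actual`, recursively.

    Extra keys in `actual` are allowed; lists and scalars must match exactly.
    """
    if isinstance(expected, dict):
        return isinstance(actual, dict) and all(
            key in actual and contains_json(actual[key], value)
            for key, value in expected.items()
        )
    return actual == expected


def response_should_contain_json(response_body: str, expected_text: str) -> None:
    """Hypothetical generic step: the expected JSON arrives as plain text from the feature file."""
    actual = json.loads(response_body)
    expected = json.loads(expected_text)
    if not contains_json(actual, expected):
        raise AssertionError(f"Expected {expected!r} within {actual!r}")


BODY = '{"id": "u1", "email": "peter@test.com", "username": "peter", "roles": ["ROLE_USER"]}'
EXPECTED = """
{
    "id": "u1",
    "email": "peter@test.com",
    "username": "peter"
}
"""
response_should_contain_json(BODY, EXPECTED)  # passes: "roles" is just an extra key
```

Changing the expected JSON in the feature file needs no new code, which is exactly why these scenarios are cheap to write.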

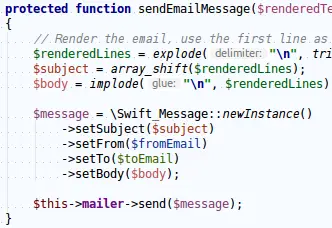

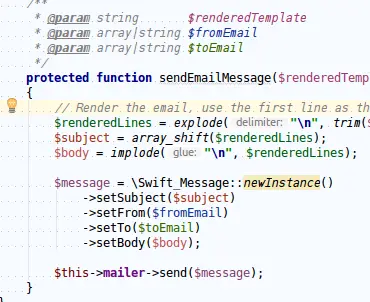

How the response is created is where PHPSpec comes in.

Beyond the basics, a controller will hand off to one or more services which do the real work.

Those services are both written under the guidance of, and tested by, PHPSpec.

PHPSpec is an unusual tool. It sells itself as a highly opinionated tool for helping shape the design of your code. If you are in agreement with the opinions it holds, then there is no better tool for TDD in PHP, in my opinion.

That’s a lot of opinion 🙂

One of the advantages of switching to PHPSpec is that it steered me away from writing unit tests that touched an actual database. This was something I used to do frequently, and it slowed my unit tests down considerably.

Anyway, the upshot of all of this is that I expect my entire unit test suite to complete well within 60 seconds, and ideally in under 20 seconds. This isn’t always the case, but it’s my target.
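The design that makes database-free unit tests possible is a service that receives its data source as a collaborator, rather than opening a database connection itself. In a PHPSpec spec that collaborator would typically be a test double; the Python sketch below uses a hand-written in-memory fake to show the same shape. All class names are hypothetical.

```python
from typing import Dict, Optional, Protocol


class UserRepository(Protocol):
    """Anything that can look a user up by ID (backed by a database in production)."""

    def find(self, user_id: str) -> Optional[Dict[str, str]]: ...


class UserProfileService:
    def __init__(self, users: UserRepository) -> None:
        self.users = users  # injected, so tests can swap in a fake

    def profile(self, user_id: str) -> Dict[str, str]:
        user = self.users.find(user_id)
        if user is None:
            raise LookupError(f"No user with ID {user_id!r}")
        # Only expose public fields, never e.g. a password hash.
        return {key: user[key] for key in ("id", "email", "username")}


class InMemoryUsers:
    """Fake repository for unit tests: a dict instead of a database."""

    def __init__(self, rows: Dict[str, Dict[str, str]]) -> None:
        self.rows = rows

    def find(self, user_id: str) -> Optional[Dict[str, str]]:
        return self.rows.get(user_id)


service = UserProfileService(InMemoryUsers({
    "u1": {"id": "u1", "email": "peter@test.com", "username": "peter", "password_hash": "x"},
}))
print(service.profile("u1"))
```

No connection is opened, so a test like this runs in microseconds rather than waiting on a database.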

Behat

As mentioned, I use PHPSpec in conjunction with Behat.

Behat serves a dual purpose for me.

It is my green light that the project behaves as expected in conditions as close to the real world as it gets.

But, equally importantly, it is my living documentation.

I can refer back to any Behat scenario to understand how a particular endpoint is supposed to work. I can then either run that test individually, or pull out the relevant parts of the test and use them to make a manual request in Postman.

Even nicer, when needed I can point other developers to this documentation to help them understand how the system should behave.

It’s not all roses though.

Unfortunately, my Behat tests take a long time to run. By which I mean between 3 and 10 minutes on most projects.

Whilst working on individual scenarios I use a lot of tagging to run only a subset of my overall test suite.
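On the command line this is Behat's --tags option (for example, vendor/bin/behat --tags=@users). The selection idea, reduced to a Python sketch with hypothetical scenarios and tags: keep a scenario if it has at least one included tag and none of the excluded ones. Behat's own filter syntax is richer than this.

```python
from dataclasses import dataclass, field
from typing import AbstractSet, Iterable, List


@dataclass
class Scenario:
    name: str
    tags: AbstractSet[str] = field(default_factory=frozenset)


def select(
    scenarios: Iterable[Scenario],
    include: AbstractSet[str],
    exclude: AbstractSet[str] = frozenset(),
) -> List[str]:
    """Names of scenarios with at least one included tag and no excluded tag."""
    return [
        s.name
        for s in scenarios
        if (s.tags & include) and not (s.tags & exclude)
    ]


# Hypothetical suite; only the first scenario name comes from a real feature above.
SUITE = [
    Scenario("User can GET their personal data by their unique ID", {"users"}),
    Scenario("User can update their email address", {"users", "slow"}),
    Scenario("Admin can list all users", {"admin"}),
]

print(select(SUITE, include={"users"}, exclude={"slow"}))
```

Only the first scenario runs, which is what keeps the feedback loop short while I work on one feature.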

However, before I merge back to master, I want to make sure I haven’t inadvertently broken anything else. It’s surprising how often this happens.

That said, I don’t want to tie up my own computer running the full test suite every time I push some code. Not only is this a huge productivity killer, it’s also something I tend to forget to do.

Therefore it makes sense to offload this task to an army of mindless automatons. Or GitLab CI runners, to you and me.

If you’d like to see an example of a project that uses Behat and PHPSpec, I have code samples and a full course here.

Continuous Integration

In my experience, Continuous Integration has been a real pain to set up.

I’m still not 100% happy with my setup. I guess it’s all about the gradual improvements.

If you’re unsure of what I mean by Continuous Integration – from here on referred to as CI – here is what I mean:

Throughout the day I work on my code.

Every time I make significant progress, I do some sort of git-related task (commit, merge, etc), and then push the code up to my repository.

All my repositories live in GitLab.

Whenever a push occurs on a specific branch, a set of events takes place. Which events run depends on the branch, whether the code was tagged, or a bunch of other variables that I (or you) can control.
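In GitLab CI these rules live in .gitlab-ci.yml, where jobs can be limited to particular branches or to tags. The decision itself is simple enough to sketch in Python; the job names and the policy below are hypothetical, not my actual configuration.

```python
from typing import List, Optional

# Every push runs every check: nothing is skipped to save time.
ALL_CHECKS = ["build", "security-check", "static-analysis", "unit-tests", "acceptance-tests"]


def jobs_for_push(branch: Optional[str], tag: Optional[str]) -> List[str]:
    """Hypothetical policy mapping a push to the CI jobs it triggers."""
    jobs = list(ALL_CHECKS)
    if tag is not None:
        jobs.append("publish-release-image")  # tagged builds become release images
    elif branch == "master":
        jobs.append("publish-image")  # master builds become the latest image
    return jobs


print(jobs_for_push("feature/wallpaper-entity", None))
print(jobs_for_push(None, "v1.2.0"))
```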

I’m fairly lazy.

Running the tests locally is a blessing and a curse.

My unit tests take almost no time (seconds).

My acceptance tests take ages by comparison (10+ minutes is not unusual).

I want to run my acceptance tests as much as possible, but I often don’t want to interrupt my entire work process whilst they complete.

Now, with some judicious use of tagging, I can run specific Behat features or individual scenarios as needed. But when I push my code up to the server, I want every test to run.

And that’s what CI brings for me.

It spins up a full environment – using Docker Compose – and then does a full build of my project (composer install, etc), checking the security advisories, running static analysis, and then running all the tests – both unit and acceptance.

At each stage I want to fail fast.

If any of my “stages” fail then the whole build aborts.
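In GitLab CI the ordering comes from the stages list in .gitlab-ci.yml: by default, jobs in a stage only run once every job in the previous stage has passed. The fail-fast logic itself, as a Python sketch where each stage is a hypothetical callable returning True on success:

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns whether the build passed and the names of the stages that ran.
    """
    ran: List[str] = []
    for name, run in stages:
        ran.append(name)
        if not run():
            print(f"Stage '{name}' failed: aborting the build")
            return False, ran
    print("All stages passed")
    return True, ran


passed, ran = run_pipeline([
    ("composer install", lambda: True),
    ("security check", lambda: True),
    ("static analysis", lambda: False),  # pretend the analyser found a problem
    ("unit tests", lambda: True),
    ("acceptance tests", lambda: True),
])
print(passed, ran)
```

The slow acceptance tests never start once a cheap check has already failed, which is why the quick stages go first.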

It took me about 6 weeks of persistent struggle to get to this stage. But it’s awesome now that it works.

These 6 weeks enable me to be lazy. I can rest assured that even if I don’t do a full test run before committing, the GitLab runner will make sure the full test run occurs anyway.

And then I get a variety of alerts (and a failed build) if they do not pass.

The upshot of this is that the Docker images produced by these builds are the ones I deploy.

If the build fails, I am completely unable to deploy this code.

It is not Ship-shape.

That’s my process, I’d love to hear about yours. I’m also open to any suggestions / feedback on what I have, so please do hit reply and let me know.

Video Update

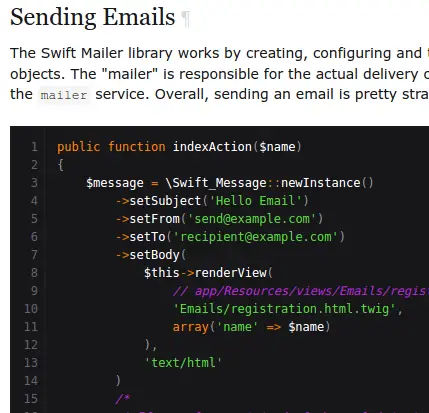

This week saw three new videos added to the site.

https://codereviewvideos.com/course/let-s-build-a-wallpaper-website-in-symfony-3/video/creating-our-wallpaper-entity

In this video we are going to start working with the database. We will create our first entity, allowing us to start saving Wallpapers to our database.

https://codereviewvideos.com/course/let-s-build-a-wallpaper-website-in-symfony-3/video/wallpaper-setup-command-part-1-symfony-commands-as-a-service

In this video you will learn how to generate a Symfony Console Command, and then how to set this Symfony Console Command as a Symfony Service.

https://codereviewvideos.com/course/let-s-build-a-wallpaper-website-in-symfony-3/video/wallpaper-setup-command-part-2-injection-is-easy

Learn how easy it is to inject parameters and Symfony services into your Console Commands. Also, learn about Symfony 3’s new kernel.project_dir parameter.

If continuous integration isn’t your bag, I’d really appreciate it if you could hit reply right now and let me know the biggest problem you’re having whilst learning Symfony.

As ever, thank you very much for reading.

Have a great weekend, and happy coding.

Chris