Ok, mega crazy title. And honestly, this is just the tip of the iceberg. Allow me to set the scene:

Lately I have had email conversations, read threads on Hacker News, and even had a forum post challenging how and why I do things the way I do.

The summary of the email conversations is: why do I persist with Symfony / PHP generally, when other, “better” solutions exist? The same can be said for the linked forum post.

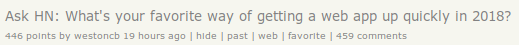

And then yesterday I saw that linked Hacker News thread:

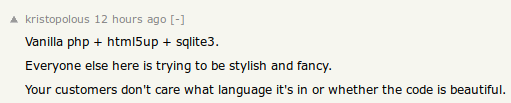

It was at about 320 comments when I read it. The top reply was the most interesting for me:

There’s a bit more to it than that, and the thread itself is worth a read. There are basically 400+ different suggested ways to “get a web app up quickly in 2018”. I’d disagree with a bunch of them, but then, they are the way I do things.

Wait, what?

Yeah, I’d disagree with Docker + Ansible + Terraform + nginx + (Symfony/Rails/Go/etc) + Postgres, etc, being quick to get up and running.

Sure, once you know the drill / have projects to copy / paste from, it can be quick, relative to the first time you had to learn and implement all this stuff. But it’s not quick quick. It still takes me ages.

And so I challenged myself: Just how quickly could I get a typical project up and running for myself? The perfect question for a Saturday night.

My Setup

The setup I most typically use is:

- Terraform for spinning up a server

- Ansible for prep’ing the box

- Docker for running stuff

- GitLab for code hosting + CI

- nginx for my web server

- Symfony / PHP 7 for the code

- Postgres for the DB

This is a lot of stuff, and it’s not super quick to set up.

This is why I started by mentioning the email / forum conversations whereby people ask: is Symfony / PHP the best choice of tool?

Well, maybe not. I don’t know. I just know I’m more productive with Symfony and PHP generally than everything else – though JavaScript is a close second.

Over the past few years I’ve tried other setups. It’s hard to invest time in learning another stack when the end result may be basically identical – what did I gain from the time invested? Could that time have been better invested elsewhere? Hard questions to answer.

But yeah, Node and more recently, Golang have been stronger contenders than usual for my attention. Anyway, that’s a bit of a digression.

The Problem

As mentioned above, that’s my stack. Learning it all took ages (years?), but as each project is, from an infrastructure point of view, very similar, I can now spin up a new environment very quickly.

My challenge was to find out how quickly. I got most of the core stuff up and running in ~1.5 hours.

I didn’t get the Behat testing environment set up in that time, because I hit an issue.

I wanted a simple JSON API as the outcome of this process. By simple I mean basically CRUD.

With the basic stack up and running, I created a basic entity (one property), and updated the DB accordingly. Doctrine was used for DB interactivity. Again, very typical for my projects.

In order to get data out of the DB, I needed to create a repository. There’s an awesome post on this by Tomas Votruba called “How to use Repository with Doctrine as Service in Symfony”.

As a side note here: if you haven’t already, I would highly recommend reading Tomas’ blog, as it’s jam packed with things you’d likely find very useful and interesting. Also, check out his GitHub projects, with Rector in particular being incredible.

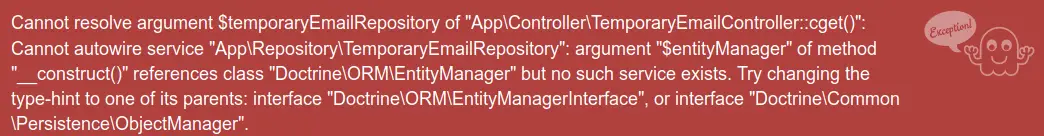

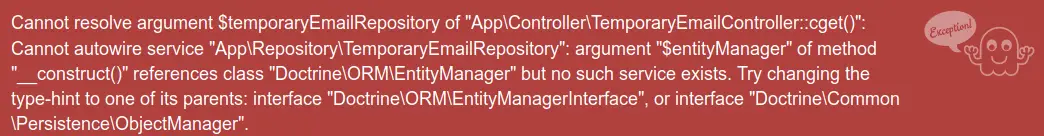

I followed the linked article, and hit upon the following:

Cannot resolve argument $temporaryEmailRepository of "App\Controller\TemporaryEmailController::cget()": Cannot autowire service "App\Repository\TemporaryEmailRepository": argument "$entityManager" of method "__construct()" references class "Doctrine\ORM\EntityManager" but no such service exists. Try changing the type-hint to one of its parents: interface "Doctrine\ORM\EntityManagerInterface", or interface "Doctrine\Common\Persistence\ObjectManager".

What was weird to me at this point is that I’ve followed this article before, but never hit upon any problems.

Anyway, I did as I was told – I switched up the code to reference the EntityManagerInterface instead:

```php
<?php

declare(strict_types=1);

namespace App\Repository;

use App\Entity\TemporaryEmail;
use Doctrine\ORM\EntityManagerInterface;
use Doctrine\ORM\EntityRepository;

final class TemporaryEmailRepository
{
    /**
     * @var EntityRepository
     */
    private $repository;

    /**
     * TemporaryEmailRepository constructor.
     *
     * @param EntityManagerInterface $entityManager
     */
    public function __construct(EntityManagerInterface $entityManager)
    {
        $this->repository = $entityManager->getRepository(TemporaryEmail::class);
    }

    /**
     * @return array
     */
    public function findAll(): array
    {
        return $this->repository->findAll();
    }
}
```

This is a really simple class.

For complete clarity, here’s basically the rest of the app at this point:

```php
<?php

namespace App\Controller;

use App\Repository\TemporaryEmailRepository;
use FOS\RestBundle\Controller\Annotations;
use FOS\RestBundle\Controller\FOSRestController;

class TemporaryEmailController extends FOSRestController
{
    /**
     * @Annotations\Get("/")
     *
     * @param TemporaryEmailRepository $temporaryEmailRepository
     *
     * @return \FOS\RestBundle\View\View
     */
    public function cget(TemporaryEmailRepository $temporaryEmailRepository)
    {
        return $this->view([
            'data' => $temporaryEmailRepository->findAll(),
        ]);
    }
}
```

And the entity:

```php
<?php

namespace App\Entity;

use Doctrine\ORM\Mapping as ORM;
use Symfony\Component\Validator\Constraints as Assert;

/**
 * @ORM\Entity(repositoryClass="App\Repository\TemporaryEmailRepository")
 * @ORM\Table(name="temporary_email")
 */
class TemporaryEmail implements \JsonSerializable
{
    /**
     * @ORM\Column(type="guid")
     * @ORM\Id
     * @ORM\GeneratedValue(strategy="UUID")
     */
    private $id;

    /**
     * @ORM\Column(type="string", name="domain", unique=true, nullable=false)
     * @Assert\Url()
     * @var string
     */
    private $domain;

    /**
     * @return mixed
     */
    public function getId()
    {
        return $this->id;
    }

    /**
     * @return string
     */
    public function getDomain(): string
    {
        return $this->domain;
    }

    /**
     * @param string $domain
     *
     * @return TemporaryEmail
     */
    public function setDomain($domain): self
    {
        $this->domain = $domain;

        return $this;
    }

    /**
     * @return array
     */
    public function jsonSerialize(): array
    {
        return [
            'id' => $this->id,
            'domain' => $this->domain,
        ];
    }
}
```

This is basically a generated entity with a couple of tweaks. It’s not the final form, so don’t take this as good practice, or whatever.

The purpose of what this class is supposed to do is also not relevant here, but will be discussed in a future video.

Anyway, the problem is evident in the code above. If you can spot it, then good stuff 🙂

If not, keep reading.

So with three records in the DB, all the connectivity set up, and things looking decent, I sent in a request to my only endpoint – GET /.

And it didn’t work. I hit a 504 Gateway Timeout error from nginx.

2018/06/03 20:02:19 [error] 7#7: *25 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 172.18.0.1, server: temporary-email.dev, request: "GET / HTTP/1.1", upstream: "fastcgi://172.18.0.3:9000", host: "0.0.0.0:807

Very confusing, overall. I mean, this is basically copy / paste from a different project that works just fine. Only, I’ve renamed the project name. What the heck?

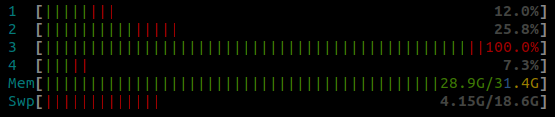

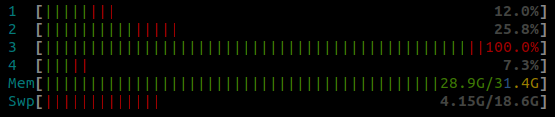

I hit refresh a few times, you know, to make sure the computer wasn’t lying to me. And then everything started going unresponsive. Very odd. I’ve just bumped the system from 16GB to 32GB, and all I have is a few Docker containers running, a browser with admittedly too many open tabs, and one instance of PhpStorm. Surely this couldn’t be taxing the system. htop told me a different story:

Yeah, I know, that swap size is ridiculous. Forgive me.

The nginx logs weren’t really that helpful. I needed to look at the PHP log output, which in this case is achieved via docker logs:

docker logs php_api_te

[03-Jun-2018 20:06:12] WARNING: [pool www] child 6 said into stderr: "NOTICE: PHP message: PHP Fatal error: Maximum execution time of 60 seconds exceeded in /var/www/api.temporary-email.dev/src/Repository/TemporaryEmailRepository.php on line 23"

Line 23 of TemporaryEmailRepository is:

public function __construct(EntityManagerInterface $entityManager)

I mucked around a bit, trying out injecting the ObjectManager instead, but hit the same issue.

Then I wondered if it was the act of injecting itself, or actually using the injected code (durr). So I commented out the call:

```php
    /**
     * TemporaryEmailRepository constructor.
     *
     * @param EntityManagerInterface $entityManager
     */
    public function __construct(EntityManagerInterface $entityManager)
    {
        // $this->repository = $entityManager->getRepository(TemporaryEmail::class);
    }
```

Reloading now, I was no longer seeing the massive RAM spike, and looking at what that call was doing pushed me along the right lines.

I’ll admit, it took me much longer than I’d have liked to realise my mistake:

```diff
 /**
- * @ORM\Entity(repositoryClass="App\Repository\TemporaryEmailRepository")
+ * @ORM\Entity()
  * @ORM\Table(name="temporary_email")
  */
 class TemporaryEmail implements \JsonSerializable
```

Now, I’m not 100% certain on the conclusion here, but this is my best guess.

I believe I had created a circular reference. I’d injected the Entity Manager into the repo. Immediately I’d asked for the entity. The entity has an annotation pointing at the repo, which triggered the endless loop.

Anyway, removing the repositoryClass attribute fixed it up. Kinda obvious in hindsight.
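To make that guess a bit more concrete, here is a toy model of the loop in plain PHP. It is only a sketch of the suspected mechanism, not Doctrine's actual internals: `ToyEntityManager`, `ToyDefaultRepository`, `ToyWrapperRepository`, `describeWrapperConstruction()` and the depth guard are all made-up names for illustration, and the guard exists purely so the demo stops instead of eating RAM until the 60 second timeout.

```php
<?php

declare(strict_types=1);

// Toy stand-in for an entity manager. NOT Doctrine's real implementation:
// it only mimics "look up the configured repository class and instantiate it".
final class ToyEntityManager
{
    /** @var int number of times getRepository() has been entered */
    public $calls = 0;

    /** @var array entity class name => configured repository class name */
    private $repositoryClassMap;

    /** @var int safety limit so the demo terminates instead of hanging */
    private $maxDepth;

    public function __construct(array $repositoryClassMap, int $maxDepth = 5)
    {
        $this->repositoryClassMap = $repositoryClassMap;
        $this->maxDepth = $maxDepth;
    }

    public function getRepository(string $entityClass)
    {
        if (++$this->calls > $this->maxDepth) {
            throw new RuntimeException('Circular repository construction detected');
        }

        // Plays the role of repositoryClass="..." on the entity:
        // build whichever class is configured, else a generic default.
        $repositoryClass = $this->repositoryClassMap[$entityClass] ?? ToyDefaultRepository::class;

        return new $repositoryClass($this);
    }
}

// Generic repository whose constructor has no side effects.
final class ToyDefaultRepository
{
    public function __construct(ToyEntityManager $entityManager)
    {
    }
}

// Same shape as the wrapper in this post: the constructor immediately
// asks the manager for the entity's repository.
final class ToyWrapperRepository
{
    private $repository;

    public function __construct(ToyEntityManager $entityManager)
    {
        $this->repository = $entityManager->getRepository('ToyEntity');
    }
}

// Builds the wrapper (as the container would for the controller) and reports
// whether construction finishes or trips the depth guard.
function describeWrapperConstruction(array $repositoryClassMap): string
{
    $entityManager = new ToyEntityManager($repositoryClassMap);

    try {
        new ToyWrapperRepository($entityManager);
    } catch (RuntimeException $e) {
        return 'loop after ' . $entityManager->calls . ' calls';
    }

    return 'ok after ' . $entityManager->calls . ' call(s)';
}

// Entity points back at the wrapper, like the repositoryClass annotation did.
echo describeWrapperConstruction(['ToyEntity' => ToyWrapperRepository::class]), PHP_EOL;

// No custom repository configured, like the fixed annotation.
echo describeWrapperConstruction([]), PHP_EOL;
```

In the first case each wrapper constructor asks for a repository, which builds another wrapper, and so on until the guard throws. In the second case the lookup resolves to the default repository once and construction completes.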

The Conclusion

I’m convinced I could get an environment up faster than this. Without hitting this issue I believe I would be at the ~2 hour mark to go from idea to having a solid setup that’s good for writing code in a sane, reproducible, reliable / testable way.

I think back to 10+ years ago, where I’d be up and running so much faster. PHP is essentially a scripting language. With shared hosting, you’d have the DB ready, the web server ready, you just needed to write a bit of code, connect to the DB, push the code up somehow (FTP :)) and bonza, you’re up and running.

Looking at it that way now, I’m amazed how far I’ve come. There’s a massive overhead with using frameworks – time spent learning (which never stops, unless your framework of choice goes EOL), patching, managing all this stuff, learning new ways to make things better… is it all worth it? I think so.

I think the biggest takeaway for me lately is that whilst within the last ~5 years I’ve shipped a lot less code to prod than in the 5 years preceding this, the code I do ship is more stable, and maintainable.

Nagging at the back of my mind, however, is this: what’s the point of this slow, methodical approach if the end result takes so long that I either don’t bother with entire ideas, or by the time I’ve shipped them, I’m so burned out by the seeming complexity of the whole thing that I lose interest in taking them further?

Anyway, I appreciate this is half helpful, half rant. I just needed to blog it and get these thoughts out of my head.