I’ve recently been forced to migrate from Rancher v1.x to using docker-compose to manage my production Docker containers.

In many ways its actually a blessing in disguise. Rancher was a nice GUI, but under the hood it was a total black box. Also, fairly recently they migrated to v2, and no true transition path was provided – other than “LOL, reinstall?”

Anyway, one thing that Rancher was doing for me – at least, I think – was making sure the log files didn’t eat up all my hard disk space.

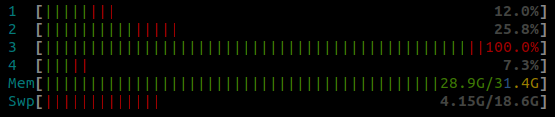

This one just completely caught me by surprise, as I am yet to get my monitoring setup back up and running on this particular box:

➜ ssh chris@1.22.33.44 # obv not real Welcome to chris server Documentation: https://help.ubuntu.com Management: https://landscape.canonical.com Support: https://ubuntu.com/advantage System information as of Wed May 13 12:28:24 CEST 2020 System load: 3.37 Usage of /: 100.0% of 1.77TB Memory usage: 13% Swap usage: 0% Processes: 321 Users logged in: 0 => / is using 100.0% of 1.77TB

Yowser.

There’s a pretty handy command to drill down into exactly what is eating up all your disk space – this isn’t specific to Docker either:

chris@chris-server / # du -h --max-depth=1 16G ./export 607M ./lib 60M ./boot 13M ./bin 5.1M ./etc 48K ./tmp 180K ./root 8.0K ./media 2.3T ./var 4.0K ./mnt 0 ./sys 4.0K ./srv 28K ./home 0 ./dev 59G ./docker 1.2G ./usr 13M ./sbin 0 ./proc 8.0K ./snap 4.0K ./lib64 316M ./run 16K ./lost+found 16K ./opt 2.4T .

The culprit here being /var with its 2.3T used… of 1.8T file system? Yeah.. idk.

Anyway you can keep drilling down with the disk usage command until you isolate the culprit. But as this is Docker related, I’ll save you the bother:

chris@chris-server /var/lib/docker/containers # du -h --max-depth=1 270G ./b3ec5a04079d5b3060d5575e011dd5e482950a794d52b4470659823dc5d5a6be 1.9M ./4b1eff96be524bdb20e87448520a30329a5e42bb36f9ba523f458d87cb8b5dc7 44K ./fc7552b499aca543a9bc6d8ef223a09d1ed21c4d054aa150b29c1d4023c151d9 40K ./43ef97d1a135f7404ca4a0330fb0ae6310ef87316358324a05bf6a65cc8d06b0 64K ./a0155805cc59d65b5815352f97939f12a4e87e30662eafa253ae8395eb553e0c 44K ./b4fd3f35324278dc832a5e98fe5a3e5655fecaf61d4de8c5e8080f8af63c22fe 40K ./b37593cd946407872e71c57af6d30b1c85c30fc42a8e075aad542fa820fbdc97 192K ./cb1e3eac9d1c1d997471f915d42fd0f999454fbee772ddc451f28e60ff1a4d22 281G ./3dd2886e627555cd6db45f68e0d1d9e520a6ab4ad443a2e43c70498b51b81fc4 1.9M ./552a0d7a7dd13afa65050381104e86395b2510c6437f650782260664ba195deb 40K ./111da287b97d0eff6b710cd7a9f0d80b7febd03c4ee30db8e2c0519e9a506c8a 40K ./47112f1fd4b0399c506fc1318ba2c0d03502b3ad731169f43a317a09556b2a6d 40K ./afeb8b8c2fbc918caaf199ae0aaca78f68e91412f9a7bc389963c6c15d6e2832 1.9M ./cb3c71ddb7181f9bbd3803cc644f36c05eedd922d4529c62354c68dedfc3bc02 624K ./c7ba5a354cdb2ab6f31fe443c04b72fad2a2e8107bc42a875422c4d80a164144 212K ./5d2b649bdb9eca4f0e14304e4ffad22b7d50d5c099a72072862a1135488105c6 56K ./781635651e0890695e1002639b5167cebf56e78fdeed3e839fc91c0681d9edd2 28M ./65aa3252acc8cf7a7af9301a0df9c117670c602f08bdb0f256eb37382a3eb859 68K ./8c8bf1febde03cf957cae5b695baf70427f6e2dec53d07d3a495ecbf2c9a3ac0 44K ./990279783af4cfacaa2e71c18eba0e6870d472dfd11e261e40a512779783fef5 40K ./37dfd1291842da4debff4c07c58d60b4efcd9e060ebfe29f1508bf418c284ca8 304K ./34c7bfcf01a6ca8e830e6108056eb0df70d4fe88eda699f3e3aa067c1afca2da 40K ./c7be4d913f95ffc040d72374bb77ad37a31846a80f40404c8b82bd5ec725d99f 1.9M ./af173be6ea5d961be308596a28e8039a20e38bc0facf623a65ee0894f28920e2 44K ./3cb4c8b263ca4c802b6df699510374929df50784b25ab8e63802ee8f2054ffc9 328K ./574599803c9143e2f87cabcc211fa3ee47f28f1e765298141f081fa1e457ced6 281G ./6bfcad1f93a7fffa8f0e2b852a401199faf628f5ed7054ad01606f38c24fc568 108K ./e5656136a35ffb242590abf50c203f5478e09bf954a6ca682cb391c11328d251 1.1M ./0150dafffbdbb830dc6ab158913eb8bd4003bf07e280579421aaeb29a7ac5623 271G ./0b5947f687fa47a70b71869468d851e4aeb3857a599a2d7eba8cf58f3b8d6bda 40K ./d014ae83b6314bcad0a159ae3cfad03a8589d4631e7c66a68ad55f1ad722f2fe 52K ./a85b7ab40a097b1548a8dea2826277742815a5e9b0c3c803b065504a2c526bb4 588K ./b5e0a0a4900e1c6fd066f5ae8d534bb832708f0caf917839c578d7debaea3783 52K ./53dd57dcbbca5248456423ebb4f0499b5d15eb5f4be7c0821979a7c880dbaa89 1.1T .

Son of a diddly.

Basically, this wasn’t caused by Docker directly. This was caused by my shonky migration.

The underlying issue here is that some of the Docker containers I run are Workers. They are little Node apps that connect to RabbitMQ, pull a job down, and do something with it.

When the brown stuff hits the twirly thing, they log out a bit of info to help me figure out what went wrong. Fairly standard stuff, I admit.

However, in this new setup, there was no limit to what was getting logged. I guess previously Rancher had enforced some max filesize limits or was helpfully rotating logs periodically.

In this case, the first port of call was to truncate a log. This might not actually be safe, but seeing as it’s my server and it’s not mission critical, I just truncated one of the huge logs:

/var/lib/docker/containers/6bfcad1f93a7fffa8f0e2b852a401199faf628f5ed7054ad01606f38c24fc568 # ls -la total 294559628 drwx------ 4 root root 4096 May 9 10:26 . drwx------ 36 root root 12288 May 9 16:50 .. -rw-r----- 1 root root 301628608512 May 13 12:44 6bfcad1f93a7fffa8f0e2b852a401199faf628f5ed7054ad01606f38c24fc568-json.log drwx------ 2 root root 4096 May 2 10:14 checkpoints -rw------- 1 root root 4247 May 9 10:26 config.v2.json -rw-r--r-- 1 root root 1586 May 9 10:26 hostconfig.json -rw-r--r-- 1 root root 34 May 9 10:25 hostname -rw-r--r-- 1 root root 197 May 9 10:25 hosts drwx------ 3 root root 4096 May 2 10:14 mounts -rw-r--r-- 1 root root 38 May 9 10:25 resolv.conf -rw-r--r-- 1 root root 71 May 9 10:25 resolv.conf.hash truncate --size 0 6bfcad1f93a7fffa8f0e2b852a401199faf628f5ed7054ad01606f38c24fc568-json.log

That freed up about 270gb. Top lols.

Anyway, I had four of these workers running, so that’s where all my disk space had gone.

Not Out Of The Woods Just Yet

There’s two further issues to address though at this point:

Firstly, I needed to update the Docker image to set the proper path to the RabbitMQ instance. This would stop the log file spam. Incidentally, within the space of truncating and then running a further ls -la, the log was already at 70mb. That’s some aggressive connecting.

This would have been nicer as an environment variable – you shouldn’t need to do a rebuild to fix a parameter. But that’s not really the point here. Please excuse my crappy setup.

Secondly, and more importantly, I needed a way to enforce Docker never to misbehave in this way again.

Fortunately, docker-compose has a solution to this problem.

Here’s a small sample from my revised config:

version: '3.7'

x-logging:

&default-logging

options:

max-size: '12m'

max-file: '5'

driver: json-file

services:

db:

image: someimage:version

environment:

BLAH: 'blah'

logging: *default-logging

worker:

image: path.to.my/worker:version

depends_on:

- rabbitmq

logging: *default-loggingOK, obviously a bit stripped down, but the gist of this is I borrow the config directly from the Docker Compose docs.

The one thing that I had to do was to put the x-logging declaration above the services declaration. Not sure why the order matters, but it didn’t seem to want to work until I made this change.

Once done, restarting all the Docker containers in this project (with the revised Docker image for the workers) not only resolved the log spam, but helpfully removed all the old containers – and associated huge log files – as part of the restart process.

Another fine disaster averted.

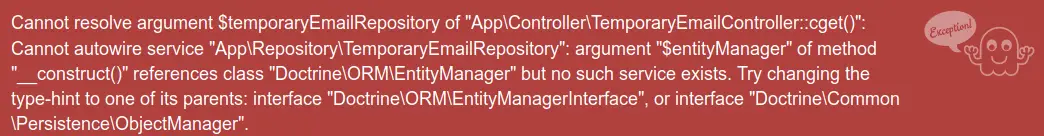

Behind the scenes over the past 10 weeks I have been working on integrating CodeReviewVideos with Braintree.

Behind the scenes over the past 10 weeks I have been working on integrating CodeReviewVideos with Braintree.